4 minutes

UCRL2

Introduction

In a Markov Decision Process (MDP) M with finite space S and finite action space A, a learner in some state ’s' choose some action ‘a’ and receives a reward ‘r’ independently from some probability distribution in [0-1] with mean r_hat(s,a). And then according to some transition probability it transits to some state s'.

We need to form such a learner that always chooses optimal action which maximizes the expected reward.

Until Now, we have considered few ways to do so. All of them follows a general policy which is

- Intialize a random policy

- Repeat 3 - 4

- Evaluate the current policy i.e find state-value function / action-state-value function for current policy

- Move greedily or e-greedily according to current state-value function / action-state-value function and improve current policy.

So we are considering the performance of the learned policy.

In UCRL or UCRL2 we are interested in performance of the learning algorithm during learning. We compare the reward collected by the algorithm with the reward of the optimal policy

We can define the undiscounted accumulated rewards as

$$ R(s) = \sum r_t $$ where s is the starting state

Above defined reward is a random variable wrt to the stocastic MDP.

The expected accumulated reward is define as

$$ \sigma(s) = Lim_{T -> inf} (1/T) * E[R] $$

where s is the starting state

Note that this is dependent on intial state

We need to form a policy that maximizes the axpected accumulated reward or average reward.

Here we define a new term Diameter of MDP : It is defined as the maximal expected time taken to move from any state s to any state s' using an appropiate policy for all s and s'.

$$ D(M) = max_{s!=s'} min_{all \pi} E[ T(s'| M,\pi,s) ] $$

Communicating MDP : These are MDPs with finite Diameter.

For communicating MDP, optimal average reward is independent of intial state

$ \sigma = Lim_{T -> inf} (1/T) * E[R] $ is independent of intial state

Regret after time T is :- $ T * \sigma - R $

UCRL2 ALGORITHM

UCRL2 is a extension of UCRL hence it also works on “OPTIMALITY IN THE FACE OF UNCERTAINITY”.

UCRL2 defines a set of pausible MDPs based on the observation so far, and choose the optimal MDP $ \hat{M} $ and the optimal policy for the optimal MDP $\hat{M}$.

In steps 2 and 3 : UCRL2 computes estimates $ \hat{ p_k(s'|s,a) } $ and $ \hat{R_k(s,a) } $ for the mean state transition probability and mean reward from the observation made before episode k.

In step 4 : A set of statistically pausible MDPs are defined in term of condifidence interval for mean state transition probability and mean reward

In step 5 : Extended Value Iteration is used to find optimal MDP and optimal Policy.

In step 6 : The policy found in step 5 is executed. Episode k ends when a state-action pair, (s,a) has been visited in current episodes as many times as before current episode.

Extended Value Iteration

Let M be the set of MDPs with same state space S and same action state A, mean transition probability $ \hat{ p}_k(s'|s,a) $ and $ \hat{R}_k(s,a) $ for mean rewards such that for any MDP in M with transition probability $ \tilde{ p}_k(s'|s,a) $ and reward function $ \tilde{R}_k(s,a) $.

$$ || \hat{ p}_k(s'|s,a) - \tilde{ p}_k(s'|s,a) < d(s,a) || $$

$$ || \hat{R}_k(s,a) - \tilde{R}_k(s,a) < d'(s,a) || $$

We can proof that if we combine the whole set of MDP, M into asingle M' with extended action space A' then finding optimal policy in M' is equivalent to find optimal MDP in M and its corresponding optimal policy.

Let the state value of the ith iteration be $u_i(s)$. Then undiscounted extended value interation :-

$$ u_0(s) = 0 $$

$$ u_{i+1}(s) = max_{a} ( \tilde{r}(s,a) + max_{p} ( p[s'] * u_i[s'] ) ) $$

where $ \tilde{r}(s,a) = \hat{r}(s,a) + d'(s,a) $

Note the optimism in face of uncertainity

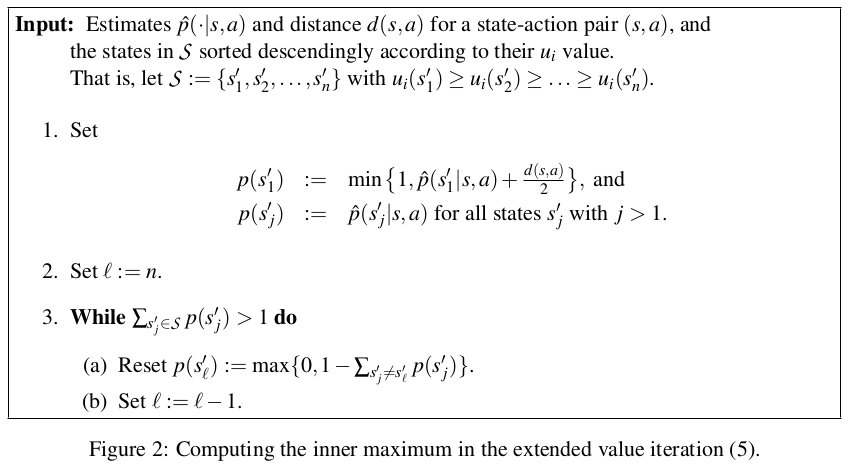

Now, in inner maximum can be efficiently computed using above given equation.

Questions :-

Page 1 :- We are interested in performance of the learnning algorithm during learning.

page 7 :- How the new MDP containing whole set has continuous action state.

page 9 :- How does inner maximum work